Implementing a U.S. State-Sponsored AI “Colossus” (2025–2030)

Introduction

The United States is on the cusp of deploying a state-sponsored artificial intelligence system for domestic governance—an integrated, centralized-but-distributed infrastructure reminiscent of the fictional “Colossus”. This prospective national AI platform would leverage cutting-edge machine learning across government and society, aiming to enhance decision-making, security, and economic efficiency. Over the next five years (2025–2030), the U.S. could methodically build such a system, linking federal agencies, private tech companies, and regional data hubs. This report examines the rationale for a national AI, the key stakeholders involved, the required infrastructure, plausible implementation models, and the societal consequences. Early signals from ongoing AI infrastructure projects in Texas, Tennessee, Virginia, and California offer case studies to inform this analysis. The tone throughout is analytical and sober, recognizing both the potential benefits and the profound ethical and societal questions raised by a “Colossus” AI governing domestic affairs.

Rationale for a National AI System

A U.S. national AI system would not emerge in a vacuum—it is driven by converging political, technological, and economic pressures. Understanding these motives provides context for why such a system might be pursued now.

Political and Security Drivers

Politically, maintaining an edge in AI has become a matter of national security and geopolitical rivalry. U.S. leaders argue that global dominance in AI is essential to “promote human flourishing, economic competitiveness, and national security” (whitehouse.gov). In particular, the intensifying U.S.–China AI race is a catalyst: officials warn that if America falls behind, the repercussions for national power and democratic values would be severe (fedscoop.com). There is concern that China’s state-driven AI initiatives (from mass surveillance to AI-enhanced military systems) could outpace the U.S., so Washington feels pressure to respond in kind. Former White House advisors have stressed an innovation-centric approach: “We have to out-innovate the competition…our founders have to be more innovative than our counterparts” (fedscoop.com). A national AI system, tightly integrated with security agencies, could help detect domestic threats, prevent terrorism, and manage crises. Post-9/11 information-sharing reforms (like fusion centers, discussed later) laid the groundwork for data integration; advanced AI could turbocharge analysis of intelligence, social media, and public safety data to anticipate problems (e.g., spotting extremist plots or pandemic outbreaks) before they manifest. Politically, some also see a national AI as a tool to reinforce social order, using predictive analytics to monitor dissidents and protest activity much as authoritarian regimes have done (brookings.edu). This raises profound civil liberty concerns (addressed under societal consequences), yet the allure of AI-enhanced control is undoubtedly a factor in the political rationale.

Technological Imperative

Technologically, the opportunity and need for a national AI system stem from recent breakthroughs in AI capabilities coupled with the infrastructure required to support them. Frontier models such as GPT-4 and its successors, along with multimodal systems, can ingest vast data streams and provide analysis or recommendations at a scale impossible for human bureaucracies. However, harnessing such models in governance demands enormous computing power and integration across many domains. The U.S. tech sector has led in foundational AI research, but scaling up implementation is critical. As one White House order stated, “with the right Government policies, we can solidify our position as the global leader in AI” (whitehouse.gov), implying a proactive effort to build AI systems at national scale. A centralized-yet-distributed AI infrastructure would allow the government to exploit cutting-edge AI in every region and agency. This includes deploying large neural networks for tasks like real-time analysis of security camera feeds, predictive modeling for resource allocation, or natural language interfaces for public services. The technological rationale also involves unifying currently siloed AI efforts. By 2023, nearly half of U.S. federal agencies had experimented with AI tools, and a Government Accountability Office survey that year counted roughly 1,200 current and planned AI use cases across federal agencies (epic.org). These range from law enforcement analytics to benefits administration, but many remain small-scale or isolated pilots. A national system would coordinate and scale these, avoiding duplication and ensuring common standards (for accuracy, bias mitigation, etc.). Proponents argue that a unified platform could be more secure and controllable, reducing the risk of disparate AI systems making unchecked decisions. Additionally, a state-sponsored “Colossus” could incorporate the latest private-sector innovations (advanced models from firms like OpenAI or Google) in a secure government cloud, accelerating tech transfer from lab to field. In short, the technological rationale is about achieving the scale, integration, and cutting-edge capability in domestic AI deployment that piecemeal efforts lack.

Economic and Competitiveness Rationale

From an economic perspective, a national AI initiative promises to spur investment, create jobs, and secure U.S. leadership in the booming AI industry. Just as the interstate highway and space programs had spillover benefits, a federal AI infrastructure project could stimulate high-tech development across the country. Massive capital is already flowing into AI infrastructure: Amazon alone has invested $75 billion in Northern Virginia data centers since 2011, adding $24 billion to the state’s GDP (semafor.com). A coordinated national project could multiply such gains across multiple states. Public-private partnerships in AI could bolster U.S. companies’ global competitiveness by guaranteeing them a large, stable domestic market (e.g., government contracts for cloud computing or AI services). The U.S. government’s commitment to sustaining “American leadership in artificial intelligence” also ties into long-term economic dominance (whitehouse.gov). Policymakers see AI as a general-purpose technology that will boost productivity in sectors from logistics to healthcare. Deploying AI nationally in governance could cut costs (by automating routine tasks) and improve efficiency (through better data-driven decisions), potentially yielding economic benefits that justify the upfront investment. Internationally, large-scale AI infrastructure is viewed as strategic infrastructure for the 21st-century economy, analogous to railroads or telecommunications in earlier eras. If the U.S. doesn’t build it, another nation might set global standards. Allies and adversaries are already moving: reports indicate China’s AI models are rapidly closing the gap with U.S. models (rand.org), and the EU is implementing its AI Act. By investing heavily now (for example, through CHIPS and Science Act funding for AI-related technology), the U.S. can shape the playing field. A domestic “Colossus” system could also act as a testbed for AI integration in a democracy, an experiment to prove that advanced AI can be harnessed for societal good without undermining freedoms. Economically, it might avert the costs of falling behind (lost markets, jobs moving overseas, brain drain). Finally, supporters point to competitive collaboration: a national AI system would rely on and reinforce the American tech ecosystem, from semiconductor manufacturers to cloud providers, strengthening domestic supply chains and reducing dependence on foreign technology.

Key Stakeholders: Public and Private Actors

Building a U.S. state-sponsored AI governance system will require a consortium of stakeholders spanning government agencies and private industry. Each brings unique resources and interests:

- Federal Security Agencies: The Department of Homeland Security (DHS) and the Department of Defense (DoD) are pivotal public stakeholders. DHS (through components like FEMA, TSA, and ICE), together with the Department of Justice’s FBI, would use the AI for homeland surveillance, threat detection, immigration control, and emergency response. The DoD, while focused on military applications, has an overlapping interest in domestic resilience (e.g., responding to cyberattacks or protecting critical infrastructure) and deep pockets for R&D. The Pentagon has already been integrating AI, often via contractors, for national security purposes (investingnews.com), and its involvement would bring funding and technical expertise, possibly repurposing defense AI technology for domestic use. Federal intelligence agencies (NSA, CIA, etc.) would also be key users and providers of data and may push for the AI system to enhance counterintelligence and anti-terrorism efforts on U.S. soil, albeit within legal limits.

- Civilian Government and State Agencies: Beyond security, a national AI would serve civilian agencies (health, transportation, finance) and state and local governments. State governments and large city administrations are stakeholders both as sources of data and as end users of AI insights. For example, state unemployment offices or public health departments could integrate with the AI to better predict economic trends or disease outbreaks in their region. Many state and local agencies are already adopting AI to improve public services (epic.org), from automated fraud detection in welfare programs to traffic optimization in “smart cities.” These agencies will influence implementation by highlighting needs (e.g., an AI service that helps adjudicate benefits applications) and by voicing their constituents’ privacy and civil rights concerns. Successful rollout likely requires state-private partnerships in which local governments collaborate with federal authorities and tech firms (for instance, a state might host a regional AI data center or pilot a new policing AI under federal grants).

- OpenAI and AI Software Labs: Among private stakeholders, OpenAI stands out as a developer of advanced general models (like the GPT series) that could form the cognitive engine of a governance AI. In fact, OpenAI is deeply entwined with emerging AI infrastructure plans: it has partnered with cloud providers and startups to expand compute capacity, even becoming an investor in some projects (coreweave.com). If a national AI system is implemented, OpenAI’s models (or those of rivals like Google DeepMind or Anthropic) might be licensed or adapted for government use, given their broad capabilities in language understanding, prediction, and data analysis. These companies have an interest in government adoption (a lucrative market and validation of their technology), but will also be concerned with how their models are used, to avoid reputational harm from oppressive applications. They may lobby for ethical guidelines or retain some control over model deployment conditions.

- Cloud and Computing Infrastructure Firms: A robust AI “Colossus” needs immense computing power, making cloud infrastructure providers key players. This includes hyperscalers like Microsoft (Azure) and Amazon (AWS), as well as specialized AI cloud startups. Notably, CoreWeave and Crusoe are two emerging firms heavily focused on GPU-based AI compute. CoreWeave has secured an up-to $11.9 billion contract to supply OpenAI with dedicated compute capacitycoreweave.com

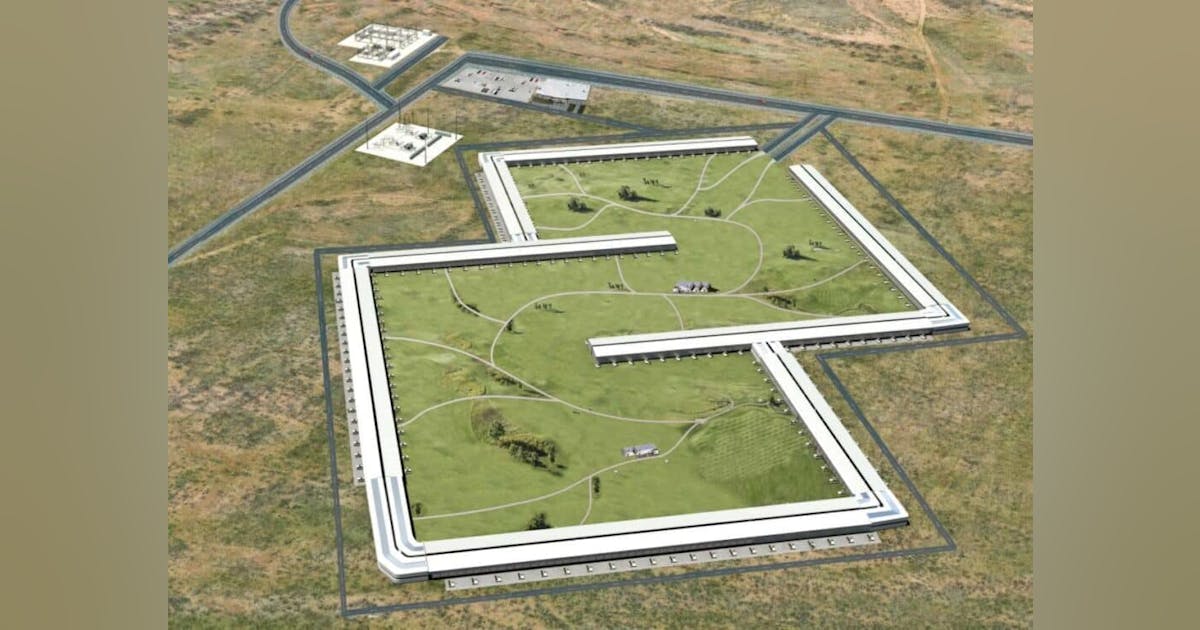

- , making it a de facto backbone for some of OpenAI’s workloads. Crusoe, similarly, has pivoted from cryptocurrency mining to AI and is building huge data centers in West Texas. In May 2025, Crusoe raised $11.6 billion to expand a new Abilene, TX facility to eight buildings (expected to become OpenAI’s largest data center)reuters.com

- reuters.com

- . These companies, along with established players like NVIDIA (providing GPUs) and Oracle (which, with OpenAI and others, launched the $500 billion “Stargate” AI infrastructure initiativereuters.com

- ), will likely form public-private consortia to build and operate the regional computing hubs. They bring technical know-how in data center design, GPU clusters, and cloud APIs. In a partnership model, they might receive federal subsidies or contracts in exchange for dedicating a share of their capacity to government AI needs. Their incentive is profit and market share, but also shaping standards – they would want the national system to perhaps use their cloud architecture or proprietary hardware.

- Logistics and Infrastructure Corporations: Stakeholders may also include companies not traditionally seen as “AI firms” but whose physical infrastructure and data are invaluable. FedEx, for example, operates the largest cargo logistics network in the country (hubs, vehicles, aircraft) and has been investing in AI-driven automation. In 2024 FedEx opened a fully automated sorting facility in Memphis (1.3 million sq. ft., with 11 miles of conveyor belts), equipped with 1,000 cameras and an AI-driven command center to monitor packages in real time (newsroom.fedex.com). This “smart hub” illustrates how private logistics infrastructure can produce real-time data flows (on shipments, movements, etc.) that a national AI might tap for situational awareness of supply chains. FedEx and similar companies (UPS, Amazon’s logistics arm, freight railroads) could become partners by sharing data or even hosting AI nodes at their facilities, possibly in return for improved analytics from the national AI (e.g., predictions to optimize delivery routes during emergencies). Critical infrastructure operators in energy and telecom (e.g., power grid companies) would also be involved, since the AI system would likely interface with smart grids and communication networks. These firms will require proper legal arrangements to share data securely and may join governance boards for the AI project to protect their interests.

- Techno-Ethicists and Civil Society: Although not “implementers,” academic and civil society experts in AI ethics, privacy, and law are crucial stakeholders influencing public opinion and policy constraints. Organizations like the ACLU, EPIC, and the Brennan Center for Justice are already monitoring government AI use. They advocate for transparency and safeguards, for instance calling for an independent AI oversight authority to ensure civil liberties are protected in national security AI deployments (brennancenter.org). These stakeholders will shape the legal scaffolding (discussed below) and could be included on advisory panels to the project. Public trust in a national AI system will depend on how well these voices are heard, given fears of a “Big Brother” AI. Thus, the implementing agencies might involve ethicists in design (to audit algorithms for bias, etc.) and in ongoing oversight roles.

Figure 1: Proposed organizational structure of a U.S. national AI system, illustrating key stakeholders and their relationships. The central AI authority (a new coordinating agency or inter-agency task force) would stand up the platform. It contracts with and draws innovation from private AI tech firms (OpenAI, CoreWeave, etc.) and guides deployment on infrastructure nodes (regional data centers and networks). Federal agencies like DHS and DoD provide funding, data, and use-cases (e.g. homeland security tasks), while receiving AI-driven insights back. State and local agencies connect via fusion centers and partnerships, feeding local data (e.g. crime reports, traffic data) into the system and using its analytics for on-the-ground action. Arrows above indicate partnerships: for example, DoD might channel advanced R&D to private firms, or DHS coordinates with state authorities to implement AI tools in local law enforcement. This structure highlights a public-private partnership model: government oversight ensures national objectives (security, equity) are met, while private innovation and infrastructure are leveraged to achieve scale.

Infrastructure Requirements for a “Colossus” AI

Implementing a centralized AI governance system will demand an extensive infrastructure, both physical and legal. Unlike a single supercomputer, this “Colossus” would consist of a network of regional AI nodes, high-speed data pipelines, and new policy frameworks to enable its operation across jurisdictions. Key elements include:

Distributed Compute Nodes and Data Centers

At the heart of the system are large-scale GPU compute zones: data centers packed with AI accelerators (GPUs or specialized AI chips) that can train and run massive AI models. These would serve as regional inference nodes, processing data locally and serving AI services to nearby areas to minimize latency. Early plans already point to such a topology. The Department of Energy, for example, identified 16 federally owned sites (often at national labs or decommissioned facilities) as potential hosts for AI data centers, chosen for ready access to power infrastructure; the goal is to have these operational by 2027 (rcrwireless.com). These sites include Idaho National Laboratory, the Pantex Plant in Texas, and other strategic locations (rcrwireless.com), indicating a geographically distributed grid of compute.

AI compute needs significant power and cooling. Each node may consume hundreds of megawatts to gigawatts of electricity, so the energy infrastructure becomes both a limiting factor and part of the design. Innovative solutions are emerging: Crusoe’s Texas campus is pairing directly with energy producers, having secured 4.5 GW of natural gas power via turbines to run its AI chips (datacenterfrontier.com). This approach of colocating data centers with power generation (including renewable or stranded energy sources) could be replicated to keep the AI network reliable without overstressing public grids. High-density AI campuses are already under construction: in Prince William County, Northern Virginia, a proposed “AI campus” would be the largest in the world, with land clearing and transmission lines going up, though not without local resistance to the noise and environmental impacts (semafor.com).

Each regional node would house not only hardware but also data storage and edge computing as needed. For resilience, the architecture would be decentralized: if one node fails or is sabotaged, others can take over critical tasks. Central orchestration, however, would keep models and data synchronized across nodes. This likely means a core national operations center (or redundant centers) that deploys AI model updates and coordinates workload distribution. The infrastructure also extends to connectivity: dedicated fiber-optic links may connect these AI hubs to each other and to key data sources, much as major Internet exchange points are interconnected. Northern Virginia’s robust fiber network and existing data center ecosystem are one reason it hosts much of the nation’s cloud infrastructure (semafor.com), a feature any national AI would capitalize on.

Finally, the system will leverage cloud computing paradigms, perhaps a hybrid of government-owned and commercial clouds. Some nodes might be federal (at DOE labs or military bases), while others might be commercial data centers contracted to host government AI workloads. This mix raises interoperability issues, making open standards and cybersecurity paramount. The compute infrastructure is thus a web of high-power nodes with strong interconnections, strategically located for energy and data access. By 2030, one can envision major AI complexes in Texas (cheap land and energy), the Midwest, the Southeast (e.g., around Memphis or Atlanta for logistics data), Northern Virginia (near D.C.), and the West Coast (linking California’s tech hubs). These would collectively act as the “brain” of Colossus.
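As a rough illustration of why a single node can land anywhere from hundreds of megawatts to gigawatts, the back-of-envelope sketch below estimates facility power from accelerator count. The defaults (about 1 kW per accelerator, including its share of servers and networking, and a power usage effectiveness, or PUE, of 1.2 for cooling and distribution overhead) are illustrative assumptions, not figures from the projects cited above; real values vary with hardware generation and cooling design.

```python
def facility_power_mw(num_accelerators: int,
                      kw_per_accelerator: float = 1.0,
                      pue: float = 1.2) -> float:
    """Estimate total facility power in megawatts (illustrative assumptions).

    IT load = accelerators x per-accelerator draw in kW (assumed to include
    the accelerator's share of host servers and networking).
    Facility load = IT load x PUE, which adds cooling and power-distribution
    overhead on top of the IT load.
    """
    if num_accelerators < 0 or kw_per_accelerator < 0 or pue < 1.0:
        raise ValueError("counts and draw must be non-negative; PUE must be >= 1")
    it_load_kw = num_accelerators * kw_per_accelerator
    return it_load_kw * pue / 1000.0  # convert kW to MW


# Compare a few node sizes under the same assumptions.
for n in (10_000, 100_000, 1_000_000):
    print(f"{n:>9,} accelerators -> {facility_power_mw(n):,.0f} MW")
```

Under these assumptions, 100,000 accelerators draw about 120 MW and a million accelerators about 1.2 GW, which is why siting decisions hinge on access to power.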

Data Integration Networks (Fusion Centers and Sensors)

Large AI models are only as good as the data fed into them. For a governance AI, data will stream in from myriad sources: surveillance cameras, social media, financial transactions, satellite imagery, government records, and IoT sensors across smart cities. To manage this, the U.S. will need a robust data integration network. A likely foundation is the existing National Network of Fusion Centers, created in the post-9/11 era to facilitate information sharing. There are dozens of fusion centers, at least one primary center in each state plus centers in major urban areas, which serve as “focal points in states and major urban areas for the receipt, analysis, gathering and sharing of threat-related information between state, local, tribal, federal, and private partners” (dhs.gov). In essence, fusion centers already aggregate local intelligence (tips, incident reports, criminal records, etc.) and pass it to federal agencies, and vice versa. They provide “two-way intelligence flow” and a “unique perspective on threats to their state or locality” (dhs.gov) that no single federal agency could replicate.

A national AI could be layered on top of this network: fusion centers become data nodes, where local data is ingested, anonymized or standardized as needed, and then sent to regional AI data centers for analysis. Conversely, insights generated by the AI (say, a prediction that a protest may turn violent, or the identification of a cyberattack pattern) can be distributed back through fusion centers to local law enforcement and officials. This integration would effectively turn fusion centers into the “senses” of Colossus, scanning the environment continuously. The fusion centers would likely require upgrades: new software platforms to interface with AI systems, and possibly additional data feeds like live CCTV video or AI-powered social media monitoring tools. Notably, some fusion centers already partner with the private sector (e.g., receiving data from banks or utilities for infrastructure security), which aligns with the AI’s need to ingest private-sector data in real time (for example, an AI might use retail supply-chain data to detect economic disruptions, or ride-share usage data to detect unusual population movements during an emergency).

Beyond fusion centers, other sensor networks would be integrated. Traffic cameras and license plate readers across the country could feed a transportation AI module. Public health data (hospital admissions, pharmacy sales) could feed an epidemic detection model. Environmental sensors (weather stations, air quality monitors) could feed climate and disaster response models. In many cases these networks exist but are fragmented by jurisdiction or sector; the national AI effort would weave them together. Legal scaffolding (next section) will be vital to enable data sharing at this scale, since current laws often silo data (for instance, state driver’s license databases or medical records can’t freely be shared for general surveillance).

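One way fusion centers could handle the anonymization step before forwarding records is keyed pseudonymization: each direct identifier is replaced with an HMAC-SHA256 token computed with a key that never leaves the local node, so records about the same entity can still be linked downstream while the raw identifier stays local. The sketch below is illustrative only; the field names (`name`, `record_id`) and the key are hypothetical. Pseudonymized data is not anonymous: anyone holding the key can re-identify records by hashing candidate identifiers, and the remaining fields can still reveal identity, so legal controls remain necessary.

```python
import hashlib
import hmac

# Hypothetical: identifier fields that must not leave the local node in the clear.
IDENTIFIER_FIELDS = ("name", "record_id")


def pseudonymize(record: dict, key: bytes) -> dict:
    """Return a copy of `record` with identifier fields replaced by HMAC-SHA256 tokens.

    The same value and key always yield the same 64-character hex token, so
    records can be joined downstream without exposing the identifier. Whoever
    holds `key` can re-identify records by hashing candidate values, so the
    key must stay at the originating node.
    """
    out = dict(record)  # never mutate the caller's record
    for field in IDENTIFIER_FIELDS:
        if field in out and out[field] is not None:
            value = str(out[field]).encode("utf-8")
            out[field] = hmac.new(key, value, hashlib.sha256).hexdigest()
    return out


local_key = b"example-key-held-only-at-the-local-node"  # placeholder, not a real key
rec = {"name": "Jane Doe", "record_id": 4711, "event": "road closure", "county": "Travis"}
print(pseudonymize(rec, local_key))
```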
The technical need, however, is clear: a secure data pipeline connecting local nodes (sensors and fusion centers) to the computing core, with strong encryption and access controls to prevent misuse or breaches. New data collection initiatives might also be launched. For example, if social media monitoring is prioritized, government AI agents could crawl publicly available posts for sentiment and indicators of unrest (as some academic projects have done, detecting hostility in tweets to predict protest violence; fastcompany.com). Drones and satellites might supply real-time imagery of critical areas. Fusing all these streams is itself a challenge: it requires not just raw data flow but semantic integration, converting diverse inputs into a common format that AI models can understand. Part of the infrastructure development, perhaps in early stages, will involve creating data standards and shared repositories (a “national data lake”) to train and refine the AI. The presence of fusion centers and DHS’s experience in intelligence analysis will help, but the scale and scope would be unprecedented domestically.
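The semantic integration described above can be illustrated with environmental sensors. In the minimal sketch below, two hypothetical vendor feeds report temperature under different field names, units, and timestamp conventions, and one adapter per source maps each payload into a shared `Reading` record (UTC timestamps, degrees Celsius). The feed formats, field names, and adapter registry are invented for illustration; a real national data standard would be far richer.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Reading:
    """Common record shared by all downstream models."""
    sensor_id: str
    observed_at: datetime   # always timezone-aware UTC
    temperature_c: float    # always degrees Celsius


def from_vendor_a(raw: dict) -> Reading:
    # Hypothetical feed A: ISO-8601 timestamp with offset, temperature in Fahrenheit.
    return Reading(
        sensor_id=f"A:{raw['id']}",
        observed_at=datetime.fromisoformat(raw["time"]).astimezone(timezone.utc),
        temperature_c=round((raw["temp_f"] - 32) * 5 / 9, 2),
    )


def from_vendor_b(raw: dict) -> Reading:
    # Hypothetical feed B: Unix epoch seconds, temperature already in Celsius.
    return Reading(
        sensor_id=f"B:{raw['station']}",
        observed_at=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        temperature_c=float(raw["celsius"]),
    )


# Registry mapping each source name to its adapter.
ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}


def normalize(source: str, raw: dict) -> Reading:
    """Dispatch a raw payload to the adapter registered for its source."""
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter registered for source {source!r}") from None
    return adapter(raw)


readings = [
    normalize("vendor_a", {"id": "17", "time": "2025-07-01T14:00:00-05:00", "temp_f": 98.6}),
    normalize("vendor_b", {"station": "TX-04", "ts": 1751396400, "celsius": 36.5}),
]
for r in readings:
    print(r)
```

Both sample payloads describe the same moment (19:00 UTC on July 1, 2025) and end up directly comparable. Adding a new source means registering one more adapter rather than changing every downstream model.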

Legal and Regulatory Scaffolding

To stand up a state-sponsored AI spanning the country, legal frameworks must evolve in tandem with technology. On one hand, enabling legislation and policies are needed to authorize and fund the AI system, define its mandate, and break down barriers to data sharing. On the other hand, regulations and oversight are crucial to address privacy, bias, and accountability, lest the system undermine civil liberties. This legal scaffolding can be thought of as both the “green light” for implementation and the guardrails that guide it responsibly.

In terms of authorization, the federal government may issue executive orders and seek congressional support. In early 2025 the White House moved to “clear a path” for decisive AI development by revoking older policies and asserting that the U.S. must “act decisively to retain global leadership in AI” (whitehouse.gov). This indicates high-level political will. Congress might pass a successor to the National AI Initiative Act of 2020 that specifically allocates funds for national AI infrastructure. The Department of Energy’s RFI on AI data centers (rcrwireless.com) and its identification of priority federal sites suggest that authorities for rapid construction could be invoked (potentially akin to the Defense Production Act being used to expedite tech projects). State governments would also need to adjust laws if participating: for instance, state privacy laws (like California’s) might need exemptions to allow sharing data with federal systems for narrow purposes, or, conversely, states may demand stricter rules of their own for how an AI can use their residents’ data.

A critical legal component is data governance. The AI will rely on aggregating data that is currently protected by various laws and constitutional limits (HIPAA for health records, FERPA for education records, the Fourth Amendment’s warrant requirement for certain searches, etc.). New legal scaffolds might include expanded authorities under the PATRIOT Act or similar statutes for AI-era surveillance, or new legislation explicitly allowing AI analysis of open-source intelligence (social media, etc.) for domestic security. Simultaneously, to maintain public trust, Congress could impose conditions: requiring algorithmic audits, mandating bias testing of any AI used in law enforcement to prevent discrimination, and giving citizens some recourse if they are adversely affected by an AI-driven decision (like being denied a service or erroneously flagged as a threat). The Privacy and Civil Liberties Oversight Board (PCLOB) or a new independent agency might be tasked with overseeing the program’s impact (brennancenter.org). Indeed, experts suggest either strengthening PCLOB or creating a new body to provide “independent oversight of national security AI” with a mandate matching the sweeping scope of new systems (brennancenter.org).

Another necessary legal scaffold is a framework for public-private partnerships. Traditional procurement may not suffice; instead, the government might enter into long-term leases or joint ventures for data center capacity. Contracts (perhaps modeled on Pentagon cloud-computing contracts like JEDI or JWCC) would define how companies like CoreWeave or Amazon provide services, who owns the data, and what security requirements apply. Intellectual property issues also arise: if, say, OpenAI’s model is used for government analysis, how is its output treated (public record or not)? Who is liable if the model produces faulty guidance? These questions need clarification.

Lastly, state-level AI regulations must be reconciled with the national system. States like Colorado have passed AI accountability acts requiring transparency in AI systems that affect residents (whitecase.com). A national system would need to comply with such laws or seek their preemption. We may see a push for a federal AI law to create uniform standards, for example requiring that any AI used in “critical decisions” (policing, benefits eligibility, etc.) be tested for fairness and documented. The scaffolding is thus twofold: empowering the AI build-out (through funding, site leases, data-sharing MOUs) and constraining it (through oversight and privacy protections). The shape of this legal infrastructure will greatly influence how the system is perceived: as a benevolent aid to governance or a potential Orwellian tool. Early indications show some drift toward securitization (e.g., expanding fusion center missions beyond counterterrorism to “all crimes” monitoring, including of protesters; brennancenter.org), but also growing calls in Congress for an AI Bill of Rights or at least for transparency in government AI use. Balancing these pressures will be an ongoing negotiation throughout the implementation period.
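Bias testing of the kind Congress might mandate can start with simple screening statistics. The sketch below computes the selection-rate ratio behind the “four-fifths rule” from U.S. employment-selection guidance: each group’s rate of favorable outcomes (for example, an application approved) is divided by the highest group’s rate, and ratios below 0.8 are flagged for review. The decision log and group labels are hypothetical, and this single metric is only a screen, not proof that a system is fair.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, approved: bool). Returns approval rate per group."""
    totals, approved = Counter(), Counter()
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def impact_ratios(decisions, threshold=0.8):
    """Map each group to (rate / highest rate, flagged), where flagged means ratio < threshold."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no favorable outcomes in any group; ratio undefined")
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


# Hypothetical log: group X approved 60 of 100 cases, group Y approved 42 of 100.
log = [("X", i < 60) for i in range(100)] + [("Y", i < 42) for i in range(100)]
for group, (ratio, flagged) in sorted(impact_ratios(log).items()):
    print(f"group {group}: ratio {ratio:.2f}{'  <- review' if flagged else ''}")
```

In this example group Y’s ratio is 0.70 (0.42 / 0.60), below the 0.8 threshold, so it would be flagged for closer review.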

Implementation Model and Rollout Stages

Building a nationwide AI governance system is a complex endeavor that will likely unfold in phases. A plausible implementation model is a staged rollout coupled with pilot programs, gradually scaling up through public-private collaboration. Below is a stage-wise roadmap from 2025 through 2030, outlining how “Colossus” might come online.

Figure 2: Proposed timeline (2025–2030) for implementing a U.S. state-sponsored AI system. Each year brings specific milestones, balancing infrastructure build-out, policy development, and pilot operations.

- 2025 – Planning and Pilot Programs: In the initial year, the federal government would formalize the vision. This could involve an Executive Task Force on National AI setting requirements and drawing up an AI Action Plan (as mandated by executive order; whitehouse.gov). Pilot projects would be launched to demonstrate value and test the technology. For example, DHS might pilot an AI-enhanced fusion center in a willing state (ingesting statewide data to produce daily threat assessments), or the Department of Transportation might test an AI traffic control system in a major metro area. Meanwhile, partnerships with private firms would be negotiated. Announcements of contracts with cloud providers would follow, and commercial deals of that scale are already happening: in early 2025 OpenAI and CoreWeave signed a contract worth up to $11.9 billion to boost AI capacity (coreweave.com). Site selections for new data centers would also be made, leveraging the DOE’s 16-site list. Public engagement begins here, with outreach to explain benefits and gather input, especially in high-tech regions poised to host facilities.

- 2026 – Infrastructure Build-Out and Legal Framework: By 2026, physical construction hits full stride. The large data center projects in Texas, the Southeast, and elsewhere break ground or expand. Crusoe’s Abilene, TX campus for OpenAI, for instance, is slated to grow from its initial two buildings to eight, on its way to becoming the largest AI data center used by OpenAI (reuters.com). Similar endeavors might commence in Northern Virginia (where additional “AI zones” are approved despite local concerns) and possibly the Midwest. On the legal side, this year would see significant legislative or regulatory action: Congress might pass an AI Infrastructure Act funding the initiative, and states sign MOUs for data sharing with fusion centers. A regulatory framework starts taking shape; for example, DOJ could issue guidelines for AI use in law enforcement to ensure a baseline of fairness (responding to advocacy). By the end of 2026, several regional inference nodes (at least in prototype form) are online, and initial versions of the national AI platform software are tested across two or three pilot jurisdictions.

- 2027 – Regional Integration and Scaling: In this phase, the system shifts from isolated pilots to a networked rollout. The DOE aims to have operational AI data centers at some federal sites by the end of 2027 (rcrwireless.com), which aligns with turning on multiple regional hubs. Fusion centers in different states are now linked through the central AI system, enabling automated cross-state data analysis for the first time. For example, if a suspected security threat spans three states, the AI could automatically correlate information from each state’s fusion center and alert authorities, something previously done manually. Private-sector cloud support ramps up: perhaps Amazon and Microsoft bring their government cloud regions (AWS GovCloud, Azure Government) to supplement the dedicated centers and ensure redundancy. Implementation here likely involves federated learning or similar techniques, so that local nodes can process sensitive data and share insights with the central brain without always transferring raw data (to address privacy). Also in 2027, we expect further policy refinement, perhaps the creation of a National AI Authority (an agency or interagency council) that formally takes charge of the system’s operation and maintenance. Critically, 2027 might be when early successes or failures become evident, prompting adjustments. For instance, if the AI helped reduce emergency response times in pilot cities by analyzing 911 calls, that success would be used to justify expansion; conversely, any high-profile error (like a wrongful arrest due to an AI misidentification) would trigger calls to halt the program or add safeguards.

- 2028 – Initial Operational Capability: By 2028, the national AI system could reach an initial operational capability covering a broad swath of applications. Many federal agencies and a majority of states might be connected in some fashion. This year could see the AI actively assisting in major events, for example by providing real-time analytics during a natural disaster, a designated National Special Security Event, a large protest, or election monitoring. The system will still be under careful observation; think of 2028 as a large-scale beta test. On the technology front, improvements are made: upgraded AI models (possibly next-generation multimodal models that handle video, text, and audio together) are deployed, and edge devices (like AI-powered cameras or local servers in police cars) are integrated to extend reach. User training becomes a focus: thousands of government analysts, police officers, and officials train to work with AI outputs (trusting but verifying them). A staged rollout also means some regions may have more advanced capabilities than others, allowing comparisons. For example, perhaps Los Angeles and New York have fully integrated smart city AI systems by 2028 (thanks to previous groundwork), whereas rural areas are slated for later. These variations create a natural experiment.

- 2029 – Expansion and Optimization: Having proved its value (one hopes) in prior years, the system in 2029 focuses on expanding coverage and optimizing performance. More data sources are linked in, perhaps financial transaction monitoring by Treasury to flag fraud, or expanded healthcare analytics by HHS for predicting drug abuse epidemics. The public-private partnership might deepen, and new entrants could join (for instance, if another company develops a superior AI chip or a novel algorithm, it might win a contract to upgrade the system). The system’s maintainers will also work on cost optimization: AI infrastructure is expensive (potentially millions of GPUs), so improving energy efficiency is key. This might include locating new data centers near renewable energy farms or scheduling heavy computing tasks for periods when power grid demand is low. By 2029, remaining holdout states or agencies, including those that had reservations, might be brought on board. Politically, there could be the first comprehensive oversight report to Congress on the system’s impacts, and if a new administration is in office by then, it will evaluate whether to continue, scale back, or pivot. Regionally, experiments from earlier years (like specific predictive policing trials or automated service centers) are either scaled nationwide or discarded based on measured outcomes.

- 2030 – Full Operational Deployment: By 2030, the vision of a centralized AI governance infrastructure could be fully realized. In this stage, the system transitions to routine operation and is considered a permanent fixture of U.S. governance (much as DHS became after 9/11). It would be integrated into daily government workflows: analysts rely on AI summaries for their morning intelligence briefs; AI-driven forecasts inform the Federal Reserve and economic policymakers; city mayors get near-real-time AI alerts about emerging issues (crime spikes, infrastructure stress). The focus in 2030 is on continuous improvement and on addressing unintended consequences that have emerged. There may be spin-off benefits. For example, the same AI infrastructure might support scientific research or education (a National Research Cloud open to universities and piggybacking on the government’s investment has been discussed in AI policy circles). The governance structure would also be finalized, perhaps with a dedicated unit (colloquially a “National AI Center”) maintaining the system and liaising with an oversight board and the tech partners. By this point, the U.S. would have a mature model for large-scale AI deployment that other nations might emulate, or that might become part of international collaborations (or competitions). The success or failure of this five-year effort will influence debates about AI’s role in society for decades to come.
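The 2027 stage imagines federated learning, in which local nodes train on sensitive data that never leaves them and send only model parameters to the center. The sketch below shows the classic federated averaging idea: each round, the center averages the nodes’ locally trained parameters, weighted by how much data each node holds. It assumes a toy one-feature linear model. The node data, learning rate, and round counts are invented for illustration and are not drawn from any real deployment.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs that stay on the node


def local_update(w: float, b: float, data: Data,
                 lr: float = 0.05, epochs: int = 20) -> Tuple[float, float]:
    """Gradient descent on mean squared error using only this node's data."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_average(nodes: List[Data], rounds: int = 50) -> Tuple[float, float]:
    """Each round: broadcast (w, b), train locally, average weighted by node size."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in nodes)
    for _ in range(rounds):
        updates = [local_update(w, b, d) for d in nodes]  # only parameters come back
        w = sum(len(d) * uw for d, (uw, _) in zip(nodes, updates)) / total
        b = sum(len(d) * ub for d, (_, ub) in zip(nodes, updates)) / total
    return w, b


if __name__ == "__main__":
    # Hypothetical nodes whose data both follow y = 2x + 1 but cover different ranges.
    node_a = [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0)]
    node_b = [(x, 2 * x + 1) for x in (1.5, 2.0)]
    w, b = federated_average([node_a, node_b])
    print(round(w, 3), round(b, 3))  # approaches slope 2 and intercept 1
```

Weighting by node size keeps large jurisdictions from being drowned out by small ones. Shared parameters can still leak information about the underlying records, so real systems typically layer on secure aggregation or differential privacy.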

Throughout these stages, iterative development and feedback loops are crucial. Implementation is not purely linear; there will be overlapping progress in technology, policy, and operations, each informing the other. The staged approach is designed to manage risk – starting small, proving value, and then scaling – in a domain that is both promising and perilous.
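The 2029 stage mentions scheduling heavy computing for periods when power grid demand is low. One simple way to do that is to slide a window over an hourly demand forecast and start the job where total forecast demand is lowest. The sketch below assumes such a forecast is available and that a batch job needs a fixed number of contiguous hours. The function name and the forecast numbers are hypothetical.

```python
from typing import List


def best_start_hour(forecast_demand: List[float], job_hours: int) -> int:
    """Return the start index of the contiguous window with the lowest total forecast demand."""
    if not 1 <= job_hours <= len(forecast_demand):
        raise ValueError("job_hours must be between 1 and the forecast length")
    window = sum(forecast_demand[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast_demand) - job_hours + 1):
        # Slide the window one hour: add the newly covered hour, drop the oldest one.
        window += forecast_demand[start + job_hours - 1] - forecast_demand[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


if __name__ == "__main__":
    # Hypothetical hourly regional demand forecast (arbitrary units).
    forecast = [70, 65, 55, 50, 52, 60, 75, 90]
    print(best_start_hour(forecast, 3))  # 2: hours 2-4 have the lowest combined demand
```

The sliding window makes this linear in the forecast length. A production scheduler would also weigh electricity prices, carbon intensity, and job deadlines.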

Societal Consequences and Implications

A government-run AI system on this scale would reverberate throughout American society. Its deployment raises profound questions about surveillance, labor, civil liberties, and the relationship between citizens and the state. Here we explore major implications, weighing potential benefits against risks.

Enhanced Surveillance and Civil Liberties

One immediate consequence of a “Colossus” AI is a dramatic expansion of surveillance capabilities. By knitting together cameras, databases, and social media feeds with powerful AI analytics, authorities could track individuals and groups in ways previously impossible. The United States has historically been wary of domestic surveillance overreach, but advanced AI may test those boundaries. The system could enable real-time facial recognition across CCTV networks, automatic flagging of “unusual” behavior patterns, and continuous monitoring of communications for certain keywords or sentiments. In effect, it inches toward a panopticon, echoing concerns raised by China’s pervasive AI-driven surveillance of dissidents [brookings.edu]. The Brookings Institution warned in 2025 that “AI surveillance may no longer just be a foreign government threat” and that abuses “within the United States” are a real concern [brookings.edu]. Indeed, as advanced AI falls into the hands of domestic law enforcement and intelligence agencies, the risk is an erosion of privacy and free expression. For instance, if the AI flags someone as a potential threat based on travel patterns or online posts, that person might be investigated or added to a watchlist without their knowledge, even if they have committed no crime. Large-scale data retention by the AI system (recording the movements of millions of people) could become the default, effectively nullifying the idea that citizens are “left alone” when not suspected of wrongdoing. Civil liberties organizations are likely to challenge such practices, possibly in court. They could argue on Fourth Amendment grounds (unreasonable search) or First Amendment grounds (a chilling effect on free speech, especially if protest activity is monitored).

A concrete example of these tensions emerged with the State Department’s new AI-driven “Catch and Revoke” program, which scrapes social media to identify foreign students or visitors who voice support for designated groups and then revokes their visas [brennancenter.org]. In one case, a university student was arrested and had his visa revoked, seemingly in retaliation for campus protest activity [brennancenter.org]. If AI systems can be used to punish political speech (even of non-citizens), that sets a worrisome precedent for broader use against citizens. It also highlights how AI lowers the cost of such monitoring, since the system can scan far more information than human agents could.

On the flip side, proponents might argue that an AI-enhanced surveillance apparatus could actually reduce bias and increase effectiveness in law enforcement. For example, instead of profiling based on race or neighborhood, police might rely on AI analysis of behavior, which, if properly tuned, could be more objective. AI could also help quickly find missing persons or identify perpetrators (e.g. using facial recognition to spot an abductor on highway cameras). The key issue, however, is governance: without stringent oversight, the temptation for misuse is high. History offers caution. Fusion centers, which would feed the AI, have already been caught monitoring lawful activists across the political spectrum with dubious justification [brennancenter.org].

By 2030, surveillance expansion might mean not just watching public spaces but analyzing personal data (such as purchasing habits and smartphone location) in real time, a level of intrusion Americans have not experienced at scale. This could fundamentally alter the citizen-government relationship, making many feel perpetually observed by an algorithmic guardian (or warden). Societal trust in the system will hinge on transparency and control measures. These include water-tight, tamper-evident audit logs, the ability of independent auditors to review what the AI is querying or flagging, and strong consequences for abuse. In summary, enhanced surveillance is a double-edged sword. It might improve security and response times, but at significant cost to privacy, and possibly to democracy, if not managed within the rule of law.
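One common way to make audit logs tamper-evident is hash chaining: each entry commits to the hash of the entry before it, so editing, reordering, or deleting history breaks the chain on verification. The sketch below is a minimal version under stated assumptions. The `AuditLog` class and the actor and target names are hypothetical, and a real system would also need durable storage and access controls.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict) -> str:
    # Canonical JSON (sorted keys) so identical records always hash identically.
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only log in which each entry commits to the hash of the previous one."""
    entries: list = field(default_factory=list)

    def append(self, actor: str, action: str, target: str) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"actor": actor, "action": action, "target": target, "prev": prev}
        record["hash"] = _digest(record)
        self.entries.append(record)
        return record["hash"]

    def verify(self) -> bool:
        """Recompute the chain; edits, reorders, or deletions before the last entry fail."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("actor", "action", "target", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("analyst_17", "query", "plate:ABC123")  # hypothetical identifiers
    log.append("analyst_17", "flag", "person:4411")
    print(log.verify())                       # True
    log.entries[0]["target"] = "plate:XYZ999"  # quietly rewrite history
    print(log.verify())                       # False
```

A chain alone cannot detect someone truncating the newest entries. For that reason, the latest hash is usually published periodically to an independent party, such as the external auditors the text describes.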

Automation of Labor and Public Services

A national AI platform would also accelerate automation in the workforce, especially in government services and related industries. By design, such a system automates tasks previously done by humans: data analysis, report generation, even decision-making in areas like resource allocation or applicant screening. In government agencies, this could lead to a reduction in certain job roles. Consider public benefits programs (Social Security, food assistance, etc.). If AI systems handle initial applicant risk scoring or fraud detection, fewer human caseworkers might be needed for those functions. The Electronic Privacy Information Center notes that governments are already using AI to “expand or replace” functions such as law enforcement monitoring and public benefit decisions [epic.org]. These systems are often “faulty and opaque”, which raises the concern that replacing human judgment with algorithms may lead to errors that are hard to contest [epic.org]. If a centralized AI denies someone’s loan or flags a worker as “non-essential,” how does one appeal an algorithm? Labor unions may push back on unwarranted automation, insisting on human review for important decisions.

Beyond government offices, the private sector will likely mirror the AI adoption. The national system could drive standards and provide tools that companies use, hastening automation in fields like transportation, logistics, and customer service. FedEx’s Memphis World Hub is illustrative. FedEx invested in a 1.3 million sq ft automated facility, with AI-driven scanning and tracking, capable of sorting 56,000 packages per hour with minimal human intervention [newsroom.fedex.com]. That level of automation directly affects labor: work that once required many hundreds of people on sorting lines can now be managed by a smaller crew overseeing machines and algorithms. As national AI infrastructure develops, similar automation could spread. Examples include autonomous trucks on highways (with AI coordinating their routes) and AI-operated “fusion” call centers handling 911 calls or citizen inquiries with minimal human handoff. While this promises efficiency and potentially lower operational costs for governments (and thus taxpayers), it also means workforce displacement must be addressed. Government roles in data entry, surveillance monitoring, routine analysis, and even middle management could be thinned out. The economy at large might see productivity gains, but also dislocation for segments of workers, particularly in mid-skill white-collar jobs that involve a lot of information processing.

On the positive side, automation via AI could improve service quality if done right. Citizens might get responsive service around the clock (through AI chatbots or virtual assistants on government websites). They could also see faster processing of permits and licenses, since the AI can cross-verify documents instantly, and more personalized public services, with AI analyzing one’s records to proactively offer programs or schedule health interventions. The “smart city” aspect means things like traffic lights automatically adjusting to traffic flow (reducing commute times) and utilities predicting outages to fix issues preemptively. All of this could enhance daily life, effectively raising the standard of governance. The trade-off is the human element: some people may feel alienated dealing with impersonal AIs instead of human clerks, especially in sensitive contexts like healthcare or social support. From a societal viewpoint, labor automation might widen inequality if not managed, as tech-savvy workers and AI specialists gain while those in automated-away roles lose. This places a premium on retraining programs.
The government might use the AI itself to forecast labor market shifts and guide policy (e.g. identifying regions where job loss is likely and focusing economic development or education resources there). The 2025–2030 timeline is too short for massive labor market changes to play out, but it will set trends. By 2030, some government offices might be “AI-first”, employing relatively few people because AI handles the bulk of the work. Society will need to adapt its expectations, perhaps measuring the success of public institutions not by headcount but by outcomes delivered. There is also a scenario where automation frees human workers to focus on more complex, interpersonal tasks. For example, police could spend less time on paperwork and more on community engagement because AI handles the paperwork. Whether the net effect on labor is positive or negative will depend on policy choices made alongside the AI rollout (such as ensuring there is a plan for displaced workers). Nonetheless, labor automation is an inevitable consequence of implementing a system designed to optimize and streamline on a national scale.
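The question of how to appeal an algorithm suggests one concrete safeguard: route decisions so that a human sees every adverse outcome and every low-confidence one. The sketch below illustrates that routing rule. The `Decision` fields, the outcome labels, and the 0.9 threshold are all hypothetical choices, not a description of any existing government system.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


@dataclass
class Decision:
    case_id: str
    outcome: str       # hypothetical labels: "approve" or "deny"
    confidence: float  # model's self-reported confidence in [0, 1]


def route(decision: Decision, min_confidence: float = 0.9) -> Route:
    """Only confident, favorable outcomes skip a human; adverse outcomes always get one."""
    if decision.outcome != "approve":
        return Route.HUMAN_REVIEW  # a person must sign off before anyone is denied
    if decision.confidence < min_confidence:
        return Route.HUMAN_REVIEW  # uncertain approvals are double-checked too
    return Route.AUTO_APPROVE


if __name__ == "__main__":
    for d in (Decision("c1", "deny", 0.99), Decision("c2", "approve", 0.95)):
        print(d.case_id, route(d).value)
```

The asymmetry is deliberate. Automation speeds up the cases where an error costs the citizen little, while every decision that harms someone still reaches a person who can be questioned and overruled.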

Predictive Policing and Protest Monitoring

A particularly sensitive application of a national AI is predictive policing and the monitoring of social unrest. Using AI to forecast crime or social disorder has been tried at the local level, and a national system would supercharge these capabilities. By analyzing historical crime data, real-time incident reports, and even social media chatter, the AI could predict hotspots of criminal activity, the likelihood of protests, or the chance of protests turning violent. The intention is to let law enforcement preempt crime or prepare for crowd control, but experience so far shows the pitfalls. For example, the Los Angeles Police Department pioneered data-driven policing programs like PredPol and Operation LASER, which purported to predict crime and identify chronic offenders. However, analyses showed these programs mainly “validated existing patterns of policing”. They often reinforced bias, disproportionately targeting Black and brown communities and areas historically over-policed [theguardian.com]. After public outcry and evidence of harm, LAPD ended those programs around 2020 [theguardian.com]. This serves as a cautionary case: if a local predictive system ended up automating harmful police practices, a larger AI could amplify such issues across the nation.

Despite the risks, the allure of predicting crime is strong. A national AI could integrate diverse data (911 calls, weather, event schedules, etc.) to forecast, say, an increased burglary risk in a neighborhood next week, allowing preventive patrols. Some research even shows AI can parse social media to predict when protests will turn violent. One AI system scanned 18 million tweets from the 2015 Baltimore protests and found that spikes in “moral outrage” tweets correlated with, and could predict, increases in arrest rates (a proxy for violence) during the protests [fastcompany.com]. With such a capability, federal agencies could monitor online discourse nationwide and alert local police if a particular demonstration is likely to escalate. The Department of Homeland Security might also use it to allocate resources (such as sending federal protective services to cities anticipating unrest).

However, this edges into thought-policing territory. People might fear expressing dissent online if they believe AI surveillance tags protest organizers as potential rioters. Algorithmic judgment could blur the distinction between legitimate protest and incitement, potentially leading to preemptive crackdowns. The Brennan Center has highlighted that in the current climate, advanced surveillance has been used to target even peaceful protesters under broad labels [brennancenter.org]. With AI, that targeting could become more efficient and widespread. For society, this raises First Amendment alarms. Will freedom of assembly and speech be chilled by omnipresent AI eyes? If, for instance, organizers notice drones overhead at every protest and suspect an AI is cataloguing participants, some may stay home. In communities of color and activist circles, trust in law enforcement is already fragile. It could erode further if people suspect an AI is reinforcing systemic biases, a fear justified by earlier predictive policing programs.

Mitigating these consequences requires transparency (making public the criteria the AI uses, perhaps via community oversight boards). It also requires strict limits on how predictive policing insights are used, for example prohibiting their use as the sole grounds for search warrants or arrests, to avoid dystopian “pre-crime” scenarios. That said, when used carefully, such AI predictions could improve safety. For example, if the AI accurately predicts a protest will be peaceful, police might adopt a less aggressive stance, avoiding unnecessary confrontations. Predictive analytics might also highlight underlying causes of crime, such as pinpointing areas with blight and poverty that need social services rather than more policing. In the best case, the AI becomes a tool for preventive, holistic interventions (sending outreach workers rather than just officers). Yet the central concern is accountability: unlike human decision-makers, algorithms are opaque and not directly answerable to the public. Ensuring the system does not overstep will be one of the biggest societal challenges.
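The LAPD finding that predictive tools “validated existing patterns of policing” has a simple mechanical explanation: a feedback loop. Patrols go where crime was recorded, but crime is mostly recorded where patrols go. The toy model below is a deliberately simplified, hypothetical illustration of that loop, not a model of PredPol or any real system. It has two districts, one patrol per day, and crime discovered only in the patrolled district.

```python
from typing import List


def simulate_patrols(recorded: List[int], true_rate: List[float], days: int) -> List[int]:
    """Toy feedback loop: the patrol goes where the most crime has been *recorded*.

    Crime is only recorded in the district the patrol visits, so an early lead
    in the records can lock in, whatever the true underlying rates are.
    Returns how many days each district received the patrol.
    """
    recorded = list(recorded)  # avoid mutating the caller's list
    visits = [0] * len(recorded)
    for _ in range(days):
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        visits[target] += 1
        # Crimes discovered that day; rounding keeps the toy deterministic.
        recorded[target] += round(true_rate[target] * 10)
    return visits


if __name__ == "__main__":
    # Equal true crime rates, but district 0 starts with slightly more records.
    print(simulate_patrols([6, 4], [0.5, 0.5], 30))  # [30, 0]
    # Even if district 1 truly has MORE crime, it is never observed.
    print(simulate_patrols([6, 4], [0.5, 0.9], 30))  # [30, 0]
```

Real deployments are noisier, but the structural problem is the same: a model trained only on the data its own decisions generate cannot discover that it is wrong. This is one reason critics call for independent ground-truth data and human oversight.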

Social Narrative Shaping and Information Control

Another consequence of a centrally coordinated AI is its potential use in shaping public narratives and controlling information. In essence, the state could leverage AI to influence what society believes or discusses. This can happen overtly, through public messaging informed by AI, and covertly, via algorithmic manipulation. On the overt side, a national AI could analyze public opinion at scale (scraping posts, news, and blogs) and identify trends or emerging concerns. Government agencies might use this to tailor their communications or deploy targeted information campaigns. For example, if the AI detects growing public fear about a pandemic or unrest, officials could rapidly craft press releases or social media posts (possibly even using AI to generate empathetic language) to steer the narrative, dispel rumors, or maintain calm. This could be benign, even beneficial in crisis communication, by ensuring accurate information spreads faster than misinformation. However, the line into propaganda is thin. With AI, the government might simulate how messages will be received by different demographics and fine-tune them for a desired emotional impact, essentially A/B testing propaganda in real time.

Covertly, the government could employ bots and deepfakes to seed narratives, and an AI system could manage a whole army of such bots efficiently. While democracies have norms against domestic propaganda, some instances of government influence operations have come to light (for instance, military-linked personas that social platforms removed for spreading U.S.-aligned narratives abroad). Domestically, one could envision an AI that floods extremist forums with counter-narratives or subtly boosts certain viewpoints in online discourse to marginalize others. This raises profound ethical issues about manipulation of the information ecosystem. It could also backfire, as people lose trust in media if they suspect it is orchestrated by AI psy-ops. Furthermore, controlling the “narrative” can extend to censorship.
An AI that monitors social media might be tasked with flagging “disinformation” or “dangerous content.” Combating disinformation (e.g. foreign influence campaigns or harmful conspiracy theories) is important, but civil libertarians worry that these labels can be applied in a biased way to stifle legitimate debate. DHS’s short-lived Disinformation Governance Board drew exactly this controversy, and a powerful AI might effectively perform such a board’s function behind the scenes. For instance, if authorities decided that certain political ideologies correlate with domestic terrorism, an AI could mark content from those ideologies for throttling or scrutiny. The underlying failure mode is not hypothetical: commercial platforms already use AI content moderation that sometimes erroneously flags journalism or activism as extremist. In government hands, mistakes could have legal ramifications (e.g. launching investigations based on a bad AI flag). On the other hand, narrative-shaping AI could help protect democracy if used to counteract foreign propaganda. The system might quickly identify deepfake videos or fake news spread by adversaries and help publicize corrections. It is a tool that can either enlighten or manipulate, depending on how it is used. The societal consequence is a potential erosion of the line between truth and engineered consensus. If citizens come to feel that the “official story” on any event is backed by an AI’s selective presentation of facts, trust in institutions might decline further. Maintaining a healthy information space in the AI era likely requires new norms and oversight. Congress might, for example, demand reports on any AI-driven information campaigns the government runs, even for benign purposes. Transparency could involve watermarking content created or amplified by government AIs. Tech platforms are stakeholders here too, and they may need to flag or limit government-coordinated bot activity. Ultimately, society will need to be vigilant that “Colossus” does not become a Ministry of Truth.
This is a key ethical frontier for techno-ethicists: ensuring AI serves an informed citizenry rather than nudging the citizenry into the government’s preferred mindset.
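Watermarking proper embeds a signal in the content itself. A simpler relative is a cryptographically bound disclosure label that states who produced the content and whether AI generated it, so that stripping or falsifying the label becomes detectable. The sketch below shows that idea with HMAC-SHA256 from Python’s standard library. The key, the agency name, and the label fields are hypothetical. An HMAC can only be checked by holders of the same key, so a public scheme would instead use public-key signatures, as content-provenance standards such as C2PA do.

```python
import hashlib
import hmac
import json

# Hypothetical signing key held by the publishing agency (never hard-code real keys).
AGENCY_KEY = b"demo-key-not-for-production"


def _payload(text: str, label: dict) -> bytes:
    # Canonical encoding that binds the label to the exact text.
    return json.dumps({"text": text, "label": label}, sort_keys=True).encode()


def tag_content(text: str, agency: str, ai_generated: bool) -> dict:
    """Attach a disclosure label plus an HMAC-SHA256 tag covering text and label."""
    label = {"agency": agency, "ai_generated": ai_generated}
    tag = hmac.new(AGENCY_KEY, _payload(text, label), hashlib.sha256).hexdigest()
    return {"text": text, "label": label, "tag": tag}


def verify_content(item: dict) -> bool:
    """Recompute the tag; editing the text or altering the label makes this fail."""
    expected = hmac.new(AGENCY_KEY, _payload(item["text"], item["label"]),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, item["tag"])


if __name__ == "__main__":
    post = tag_content("Emergency shelters are open on Main St.", "example-agency", True)
    print(verify_content(post))              # True
    post["label"]["ai_generated"] = False    # try to hide the AI disclosure
    print(verify_content(post))              # False
```

`hmac.compare_digest` is used instead of `==` to avoid leaking timing information during comparison.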

Regional Experimentation and Uneven Impact

As the national AI system rolls out, it likely won’t affect all regions uniformly. There will be regional experimentation, whether by design or due to varying local conditions. Some states or cities may enthusiastically adopt and pilot the new AI tools, effectively becoming laboratories. For example, Texas, with its tech-friendly policies and existing large AI investments, might push the envelope on using AI for border security or disaster management (such as hurricane response) within its borders, offering a model for others. Meanwhile, states like California may be more cautious, implementing stricter privacy safeguards or initially limiting AI use in policing, thus creating a contrasting model. This uneven implementation can have several consequences.

One consequence is that citizens’ experiences will differ by location. A resident of Memphis, Tennessee, for instance, might become accustomed to a significant AI presence. Memphis is already seeing major AI infrastructure investment (Elon Musk’s xAI is building a supercomputer there, and a separate $500M AI data center project has been announced [actionnews5.com]), and the city government is adopting AI in public services. If Memphis were an “early adopter” city, by 2030 its police might use AI predictive tools daily, its traffic systems might be autonomously managed, and residents might interact with AI-driven kiosks at city hall. Compare this to a smaller city in, say, the Northeast that might not integrate much AI until later. Its experience with “Colossus” could lag, potentially creating a sense of inequity or even a digital divide between AI-augmented regions and the rest. There is also a risk that if something goes wrong in one region’s experiment, it could sour public opinion elsewhere and delay broader adoption. Suppose, for example, that an AI policing pilot in Los Angeles produces biased outcomes and sparks controversy.

Regional experimentation can also mean policy sandboxing: trying different rules in different places. Perhaps one state allows police use of AI facial recognition, while another bans it pending further study. These experiments will inform the national framework, but they might also cause friction between jurisdictions. Regions might compete to attract AI-related investment, touting either lax regulations (to woo companies) or strong ethical frameworks (to woo public trust and talent). For instance, Northern Virginia’s massive data center boom has brought economic benefits [semafor.com], but also NIMBY opposition and environmental strain [semafor.com]. If one region’s residents successfully push back, as in parts of Virginia and Georgia where locals fought new data center construction [semafor.com], the project might shift to more welcoming locales. That means the distribution of infrastructure (and thus of jobs and economic benefits) could change based on local sentiment.

Another aspect is cultural and social experimentation, since communities may greet AI in local governance with very different attitudes. Some communities (perhaps those with higher trust in technology or government) could embrace it, even volunteering data, for example by opting into community health monitoring programs. Others have historical reasons to distrust surveillance (e.g. Black communities that faced COINTELPRO or over-policing) and might resist or demand community control over how AI is used. This could lead to interesting local governance innovations. City councils might set up civilian oversight committees specifically for AI systems, or even “pull the plug” if AI policing is deemed too harmful, as Oakland, CA did by rejecting facial recognition. Such local pushback or adaptation will influence the national trajectory by highlighting which strategies gain public acceptance.

In positive terms, regional trials allow learning and course correction. Federal implementers can treat early-adopter cities as proof-of-concept demonstrators. Perhaps Memphis becomes a showcase of AI integration in logistics (with FedEx’s automated hub and xAI’s computing center fueling local tech growth). Los Angeles, meanwhile, might provide a cautionary tale in policing that leads to more robust bias mitigation for the AI. Northern Virginia might demonstrate how to manage an AI boom responsibly, for example by investing in grid upgrades and noise reduction for data centers after hearing residents’ complaints [semafor.com]. Over time, best practices would emerge from these varied trials.

However, the uneven rollout can also create tensions between regions and the federal government. States that feel the system is imposed on them may bristle (echoing resistance to the Real ID Act, for example). Conversely, if some states eagerly implement it and others opt out, criminals or malicious actors could exploit the gaps, treating the opt-out states as “safe havens” free of AI monitoring. The federal government might then press for uniform adoption, potentially sparking states’ rights debates. In summary, regional experimentation is both a feature and a bug. It provides valuable data on societal impact and technical performance in different contexts. But it also means the benefits and burdens of the AI system will not be evenly distributed at first, with potential political and social repercussions.

Early Signals and Case Studies

While a fully integrated national AI system is still on the horizon, we can observe early signals in specific locales that hint at how such a system might take shape. Investments and pilot programs in certain states and cities foreshadow elements of the Colossus infrastructure. Here we examine four case studies: Texas, Memphis (Tennessee), Northern Virginia, and Los Angeles – each illustrating different facets of the coming AI governance landscape.

Texas: “Neocloud” Infrastructure and Energy Synergy

Texas has rapidly become ground zero for massive AI infrastructure projects, highlighting the scale and ambition behind a future national system. In Abilene, TX, the startup Crusoe is constructing a gargantuan data center campus in partnership with major AI players. Originally a crypto-mining outfit, Crusoe pivoted to AI cloud services and has been tapped to build the first data center for “Stargate”, a venture backed by OpenAI, Oracle, and SoftBank that aims to spend up to $500 billion on AI infrastructure [reuters.com]. Recent funding of $11.6 billion expanded Crusoe’s Abilene site from 2 buildings to 8, raising its capacity dramatically [reuters.com]. The facility is projected to be the largest dedicated to OpenAI’s needs [reuters.com]. This reflects OpenAI’s strategy of reducing its reliance on traditional cloud providers (like Microsoft) by effectively building its own cloud in Texas.

Texas’s appeal lies in its abundant cheap land and energy. West Texas is dotted with wind farms, solar arrays, and natural gas fields. Crusoe exemplifies energy-AI synergy: it secured 4.5 GW of natural gas power (via a joint venture) to directly fuel millions of AI chips in its data centers [datacenterfrontier.com]. By using “stranded” natural gas that might otherwise be flared (wasted), Crusoe says it can power AI computation while mitigating emissions [datacenterfrontier.com]. This model aligns with Texas’s identity as an energy state and could be a template for powering energy-hungry national AI nodes without overloading public grids. The Pantex Plant in the Texas Panhandle, a federal nuclear facility, is also on the DOE’s shortlist of AI data center sites [rcrwireless.com]. Such a site could leverage existing power infrastructure and secure land for a government-run node.

Politically, Texas’s leadership is keen on being a tech hub and generally opposes heavy tech regulation, making the state a friendly environment for such projects. We might expect state incentives or streamlined permitting for AI facilities, though local communities may raise environmental or noise concerns. Texas also has distinctive use cases. Border security, for instance, could be a major application for AI, monitoring the long Texas-Mexico border with drones and sensors. A state like Texas might gladly pilot an AI-integrated border surveillance system, which could then be replicated federally. In summary, Texas offers a case study in AI infrastructure at scale: enormous investment, integration with the energy sector, and public-private collaboration (Crusoe with OpenAI/Oracle). It demonstrates that capacity can be ramped up quickly (billions invested within months) and hints that key parts of a U.S. AI network could be privately built but nationally purposed. However, it also raises questions about governance. These facilities are largely corporate-run, so will there be public oversight, or guarantees that their resources are available for national needs? The Texas case underscores that the hardware backbone of the national AI is already being laid.
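A rough back-of-envelope estimate shows why 4.5 GW of supply is consistent with “millions” of AI chips. It assumes an all-in facility draw of about 1.5 kW per accelerator, covering the chip, its share of host servers and networking, and cooling overhead. That figure is our illustrative assumption, not a number from the cited reporting:

```latex
\[
N \;\approx\; \frac{P_{\text{site}}}{p_{\text{accel}}}
  \;=\; \frac{4.5 \times 10^{9}\ \text{W}}{1.5 \times 10^{3}\ \text{W}}
  \;=\; 3 \times 10^{6}\ \text{accelerators}
\]
```

A lower per-accelerator draw raises the count and a higher one lowers it. For any all-in figure between roughly 1 kW and 4.5 kW, the result stays between about 1 and 4.5 million.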

Memphis, Tennessee: Logistics Hub and Local Resistance

Memphis, home to the FedEx World Hub and strategically located in the mid-South, is emerging as an unexpected AI hotspot. Two notable developments signal how AI might integrate with both private industry and local governance here. First, the FedEx Institute of Technology at the University of Memphis has been working on AI solutions for logistics, with FedEx investing in research such as predictive fleet maintenance systems (memphis.edu). More visibly, FedEx’s new automated sort facility at the Memphis hub (opened in 2024) is a microcosm of AI-assisted infrastructure: thousands of packages per hour are handled by AI-driven machinery with minimal human input (newsroom.fedex.com). The hub’s “Operations Command Center” is effectively a local “brain” optimizing FedEx’s network (newsroom.fedex.com). One can imagine a national AI tapping into such command centers at major companies to sense logistical flows, for instance by detecting supply chain disruptions in real time from FedEx data. Second, Memphis has attracted major AI compute investments beyond FedEx. In mid-2024, Elon Musk’s new AI startup xAI surprised many by choosing an old factory in Memphis to build one of the world’s most powerful supercomputers. The project moved “at breakneck speed,” repurposing an industrial site into a tech center (npr.org). The community’s response foreshadows issues the national AI build-out may face: Musk’s project caused “turmoil over smog,” as its huge power demands raised concerns about increased emissions from local power plants (npr.org). Memphis Light, Gas & Water (MLGW) had to plan significant power and water provisioning for the facility (bizjournals.com), indicating how local utilities will be critical partners for any large compute node. The EPA even began looking into the environmental impact, given Memphis’s existing air quality challenges (npr.org). Residents also raised questions about noise and land use, echoing the NIMBY sentiments heard in Virginia. Despite those concerns, Memphis officials see an economic boon: xAI’s multibillion-dollar project and another planned 1.1 million sq ft data center (by a company referred to as “5C”) promise hundreds of jobs and high-tech diversification for a city historically reliant on logistics and manufacturing (actionnews5.com). This dynamic, weighing economic growth against environmental and community impact, previews what many regions will experience as AI infrastructure spreads.

The Memphis case also highlights how private AI initiatives intersect with public infrastructure: the supercomputer project needed city cooperation on utilities, while FedEx’s automation might influence labor markets and traffic patterns in the city. From a national AI perspective, Memphis suggests that logistics hubs (Memphis is within a day’s drive of much of the U.S. population) are logical places to situate AI computing and applications. The city could also become a pilot for using AI to manage civil infrastructure. Indeed, Memphis’s city government has been using technology and AI to improve services, such as predictive analytics for code enforcement and 311 systems (according to local tech reports). It might volunteer to pilot an integrated citywide AI that ties together crime data, utility data, and logistics information to optimize city operations; if successful, such a model could be exported to other mid-sized cities. However, Memphis also sends an early warning: even for a tech-embracing project, environmental and social consequences (pollution, energy strain, community consent) cannot be ignored. The xAI supercomputer controversy shows that obtaining public buy-in is crucial, and the EPA’s involvement indicates that future AI builds will increasingly be scrutinized for sustainability. To build Colossus, the U.S. may need to ensure green energy use and community benefits to avoid backlash. Memphis, in sum, exemplifies both the promise (economic revitalization, AI integration in key industries) and the challenges (environmental impact, local approval) of deploying big AI systems at the local level.
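
To make concrete the idea, raised in the Memphis discussion, of a national AI “detecting supply chain disruptions in real time” from logistics data, the sketch below flags hours whose package throughput deviates sharply from the recent norm. It is a hypothetical illustration: the function name, the 24-hour window, the 3-standard-deviation threshold, and the synthetic data are all assumptions, and a rolling z-score is just one simple anomaly-detection technique, not a description of how FedEx or any agency actually monitors its network.

```python
from collections import deque
from statistics import mean, pstdev


def flag_disruptions(hourly_counts, window=24, threshold=3.0):
    """Return indices of hours whose count is an outlier relative to the trailing window.

    An hour is flagged when it lies more than `threshold` (population) standard
    deviations from the mean of the previous `window` hours. Hypothetical helper.
    """
    history = deque(maxlen=window)  # keeps only the most recent `window` counts
    flagged = []
    for i, count in enumerate(hourly_counts):
        if len(history) == window:  # only score once a full baseline exists
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(count - mu) / sigma > threshold:
                flagged.append(i)
        history.append(count)
    return flagged


if __name__ == "__main__":
    # Synthetic data: a steady rhythm of 1,000-1,040 packages/hour,
    # with a sudden drop at hour 30 standing in for a disruption.
    counts = [1000 + (i % 5) * 10 for i in range(48)]
    counts[30] = 400
    print(flag_disruptions(counts))
```

On this synthetic series only hour 30 is flagged. A real system would need to handle daily and weekly seasonality, which a plain trailing window ignores.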

Northern Virginia: Data Center Alley and Governance Challenges

Northern Virginia, particularly Loudoun, Fairfax, and Prince William counties, offers a glimpse into the opportunities and growing pains of hosting the backbone of a national AI. Often called “Data Center Alley,” the region handles an estimated 13% of the world’s data center capacity and is the largest such market globally (rga.lis.virginia.gov). Its concentration of facilities (over 275 data centers) grew with the rise of cloud computing, as companies like Amazon, Microsoft, and Google built huge server farms there. Now AI infrastructure is layering on top of that. The region has an “existing ecosystem for data centers, an AI-supportive governor, a robust fiber optic network, and available land” (semafor.com), ideal conditions for continued build-out. Indeed, plans are underway in Prince William County for what might become the world’s largest AI campus, with more than 20 data center buildings (semafor.com). Major tech firms are expanding there: Amazon reportedly announced $35 billion in additional Virginia data center investment by 2040, some of which will support AI services.

The Northern Virginia case study is instructive for governance. For years, data centers were seen as a purely positive local force, providing tax revenue with little need for public services. But as their scale grew, residents began to push back. Complaints center on noise pollution (the constant hum of giant cooling fans), visual blight (industrial buildings near residential areas), and strain on power infrastructure (semafor.com). A 2024 state advisory report noted that utilities will have to expand generation and transmission to serve the data centers, at costs that will take decades to recoup (semafor.com). In response to Prince William’s “Digital Gateway” proposal, citizens organized to slow or alter the projects, worried about environmental impacts and the proximity to a Civil War historical site. This led to political action: some local officials who supported unfettered data center growth lost elections. At the state level, however, Governor Glenn Youngkin vetoed a bill in 2023 that would have imposed more transparency on data center development, arguing it could deter investment and signaling an official stance in favor of growth (wtop.com).

This highlights a governance challenge: balancing national goals with local community interests. Northern Virginia may host critical national security data centers (the Intelligence Community’s cloud is largely hosted in Virginia, for instance) and will likely host key nodes of any national AI. But if local sentiment turns hostile, it could slow projects or force costly modifications (like soundproofing facilities or restricting locations). Recognizing this, Congressman Suhas Subramanyam, whose district is in Virginia, has called for a “National Strategic Data Center Plan” to guide growth and address community concerns (subramanyam.house.gov). A strategic plan might spread facilities more evenly or set best practices for environmental mitigation.

Northern Virginia is also a nexus of public-private interaction. Many data centers are owned by commercial companies but serve federal clients (through contracts like the Pentagon’s JWCC cloud or various civilian agency clouds). The region’s workforce and culture reflect that blend of government and tech. If a national AI authority is created, it might well be headquartered in the D.C. metro area, tapping into this talent pool. One could even argue that an informal national AI already exists here: agencies rely on Amazon and Microsoft data centers in Virginia daily. Formalizing it via Colossus would cement Northern Virginia’s role and could prompt initiatives to ensure security (the area is, unsurprisingly, a prime cyberattack target given its density of critical servers). To sum up, Northern Virginia demonstrates the realities of an infrastructure backbone: enormous capital investment (over $75 billion from Amazon so far, per semafor.com), integration with power and fiber networks, and the need for government coordination. It shows that physically hosting the “brain” of an AI nation has real impacts on communities, which planners must proactively manage through inclusive processes; otherwise, the very places powering America’s AI future could become battlegrounds of controversy. The lesson from Virginia is that transparency, community engagement, and strategic planning are as important as technical prowess in implementing national-scale AI.

Los Angeles, California: Early Adopter and Ethical Lessons

Los Angeles provides a case study of early AI-related deployment in governance, especially in law enforcement, and of the societal lessons learned. As the second-largest city in the U.S., L.A. has been both innovative and cautionary in its use of data analytics and AI for public safety. The Los Angeles Police Department (LAPD) was one of the first in the nation to experiment with predictive policing, touting programs like PredPol (to forecast crime locations) and Operation LASER (to identify chronic offenders or potential criminals) (theguardian.com). These programs, initiated in the 2010s, were precursors to what a national AI might do at scale: crunching historical data to guide policing activities. For a while, LAPD leadership claimed success with these tools. However, investigative journalism and community activism later revealed serious flaws. The data-driven policing often reinforced bias, leading officers to over-police neighborhoods of color, because the historical data reflected biased enforcement there (theguardian.com). Individuals labeled by the system as likely offenders faced intensive police attention (e.g., frequent stops) without having committed new crimes, a kind of AI-driven suspicion that many argued violated civil rights. Amid public outcry and a scathing report by the Stop LAPD Spying Coalition (titled “Automating Banishment”), the LAPD ended PredPol and LASER by 2020 (theguardian.com).

The L.A. experience illustrates a key ethical point: AI in policing can automate existing injustices if not carefully checked, which is why simply adopting an AI system nationally, without proper oversight, could be dangerous. The city became a hub of community pushback, with tech-savvy advocacy groups emerging to scrutinize surveillance technology. This has influenced policy; California has since taken stronger positions on oversight (such as a 2019 law that placed a three-year moratorium, since expired, on facial recognition in police body cameras).

However, Los Angeles also highlights how AI might integrate beneficially in other civic areas. The city has a burgeoning tech sector and has applied AI in services like traffic management (L.A. has tested AI for traffic signal optimization to reduce congestion) and utilities (the Los Angeles Department of Water and Power uses AI for grid management). Moreover, L.A.’s vast surveillance camera network, initially set up for anti-terrorism, now feeds into a “real-time crime center” where analysts monitor live feeds. In the future, tying that into a national AI could help solve crimes faster (for instance, by tracking a getaway car across jurisdictions). L.A. also has a major fusion center (JRIC) that coordinates intelligence for the region; such a node would be vital in any national AI data sharing, and L.A.’s experience with fusion centers, both good and bad, can inform best practices.

Culturally, Los Angeles (and California at large) might be more skeptical of a federal “Colossus.” California has enacted the California Consumer Privacy Act (CCPA) and, more recently, the California Privacy Rights Act (CPRA), giving individuals rights over their data that could conflict with seamless sharing into a federal AI system. We might expect California to demand stronger privacy protections in any national framework, acting as a balancing influence. At the same time, California’s economy includes Hollywood and a huge creative sector, where AI could be used to shape narratives but also poses risks (such as deepfakes affecting media). Hollywood and Silicon Beach (L.A.’s tech hub) might become voices in the debate on how AI should be regulated (for example, pushing for watermarks on AI-generated content).

In essence, Los Angeles serves as a source of lessons. It shows that early adoption can lead to early backlash if community interests aren’t respected. Any national AI implementation will need mechanisms to avoid the pitfalls L.A. encountered, such as ensuring transparency of algorithms used in policing, involving community stakeholders from day one, and committing not to use AI as a blunt instrument against vulnerable populations. L.A.’s mix of high tech and grassroots activism likely means it will remain a key battleground for AI ethics. As one of America’s trend-setting cities, what happens in L.A., both its successes and its failures with AI, will influence public perception nationally. For Colossus to succeed, it must prove in places like Los Angeles that it can be fair, accountable, and genuinely able to solve problems without creating new ones.
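
The mechanism behind the L.A. critique, that historical data “reflected biased enforcement,” can be shown with a toy feedback loop. The sketch below is a deliberately simplified, hypothetical model, not PredPol’s or LASER’s actual algorithm. It assumes two areas with identical true crime rates, a historical record skewed 60/40 toward area A, and that incidents are recorded only where patrols are present; the function and variable names are illustrative.

```python
# Hypothetical toy model of a predictive-policing feedback loop.
# NOT PredPol's or LASER's actual algorithm: it only illustrates how "recorded crime"
# can track where police look rather than where crime actually occurs.

def simulate_patrols(initial_recorded, true_daily_rate, days, greedy=False):
    """Return each area's final share of recorded incidents.

    initial_recorded -- historical recorded-incident counts per area (may be biased)
    true_daily_rate  -- actual incidents per day in each area
    greedy           -- if True, send all patrols to the area with the most records;
                        otherwise split patrols in proportion to recorded counts
    """
    recorded = [float(x) for x in initial_recorded]
    n = len(recorded)
    for _ in range(days):
        total = sum(recorded)
        if greedy:
            hot = max(range(n), key=lambda i: recorded[i])
            patrol_share = [1.0 if i == hot else 0.0 for i in range(n)]
        else:
            patrol_share = [r / total for r in recorded]
        # Assumption: an incident is recorded only if a patrol is there to observe it,
        # so expected new records = true rate x fraction of patrols in that area.
        for i in range(n):
            recorded[i] += true_daily_rate[i] * patrol_share[i]
    total = sum(recorded)
    return [r / total for r in recorded]


if __name__ == "__main__":
    history = [60, 40]  # area A was historically over-policed
    truth = [10, 10]    # but both areas actually have the same crime rate
    print("proportional:", [round(s, 3) for s in simulate_patrols(history, truth, 365)])
    print("greedy:      ", [round(s, 3) for s in simulate_patrols(history, truth, 365, greedy=True)])
```

After a simulated year, the proportional policy still reports a 60/40 split ([0.6, 0.4]) even though the assumed true rates are equal, so the original bias is never corrected. The greedy “hotspot” policy makes it worse, driving area A’s share to about 0.989. Real systems are far more complex, but the same logic explains why outputs trained on enforcement records can echo past enforcement patterns.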

Conclusion

The prospect of a U.S. state-sponsored AI governance system (“Colossus”) by 2030 is both awe-inspiring and unsettling. Technologically, it promises to knit the nation’s data and decision-making into a responsive, intelligent network – one that could predict disasters, optimize services, and neutralize threats with unprecedented speed and precision. Economically, it represents an enormous investment in the future, positioning the U.S. to lead the next wave of the digital revolution and perhaps yielding efficiency gains that benefit all citizens. The early moves in Texas, Memphis, Northern Virginia, and Los Angeles show that pieces of this future are already falling into place, driven by both private innovation and public initiative. Yet, as this investigation has detailed, the challenges and risks are profound. A domestic super-AI raises fundamental questions about power and liberty: How do we prevent an all-seeing AI from morphing into an instrument of authoritarian control? Can we program accountability, transparency, and ethics into a system that operates at a scale and complexity beyond direct human comprehension? The political rationale to compete with adversaries and “out-innovate”fedscoop.com